For years, NTLM has been the go-to protocol for attackers abusing authentication in traditional Active Directory environments. From relay attacks to password cracking and token manipulation, NTLM has seen its fair share of abuse. But as organisations shift to Microsoft’s cloud-first world—namely Azure AD—the battleground is changing.

So the question is: are Azure access and refresh tokens becoming the new NTLM for attackers?

Let’s take a look.

Access & Refresh Tokens – A Quick Primer

In Azure AD, authentication is no longer just about usernames and passwords. Instead, it’s about tokens. When a user successfully authenticates, Azure issues two important artefacts:

- Access Tokens: Short-lived (typically 1 hour) tokens used to authenticate to specific services (e.g., Graph API, Azure Resource Manager).

- Refresh Tokens: Longer-lived tokens (typically 90 days with sliding expiration) that can be used to obtain new access tokens without prompting the user to re-authenticate.

This modern authentication model is part of the OAuth2 and OpenID Connect stack and is deeply embedded in Microsoft’s cloud identity framework.

But here’s the catch: these tokens are just bearer tokens. Possess them, and you can use them. No passwords required.
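To make the bearer property concrete, here is a minimal Python sketch that reads the claims out of an access token. It assumes the token is a JWT, which Azure AD access tokens usually are; refresh tokens are opaque and can’t be read this way. The function names `decode_jwt_claims` and `summarise` are my own, and nothing here verifies the signature. It only shows what the token itself says about its audience, scopes and expiry.

```python
import base64
import json
from datetime import datetime, timezone


def decode_jwt_claims(token):
    """Return the claims of a JWT WITHOUT verifying its signature."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("expected header.payload.signature")
    payload = parts[1]
    # base64url segments are sent without padding; restore it before decoding.
    payload += "=" * (-len(payload) % 4)
    return json.loads(base64.urlsafe_b64decode(payload))


def summarise(claims):
    """Pick out the claims that say where and for how long a token is valid."""
    expires = datetime.fromtimestamp(claims["exp"], tz=timezone.utc)
    return {
        "audience": claims.get("aud"),           # the API the token is meant for
        "scopes": claims.get("scp", "").split(),  # delegated permissions, if any
        "expires_utc": expires.isoformat(),
    }
```

Nothing in those claims ties the token to a device or network location, which is why whoever holds it can present it until `exp` passes.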

What Makes Them Similar to NTLM?

Just like NTLM hashes, Azure tokens:

- Can be stolen and reused.

- Are used to authenticate without user interaction.

- Can be passed between systems or APIs.

- Are vulnerable to similar relay-style attacks in some flows (e.g., Device Code).

They’re not cryptographically bound to the user’s device unless newer Proof-of-Possession (PoP) tokens are in use, and those aren’t widely enforced. That makes access and refresh tokens ripe for abuse in the same way NTLM hashes once were.

Tooling: Weaponising the Token Landscape

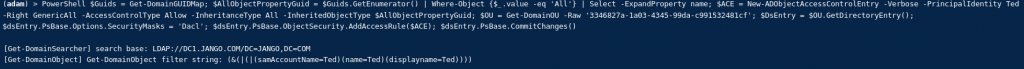

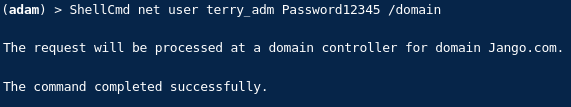

Red teamers and pentesters now have a growing arsenal of tools to enumerate, manipulate, and reuse tokens within Azure AD environments. I have recently been using the tools below alongside native interfaces such as Microsoft Graph and Azure Resource Manager, where the token can be passed in directly as authentication:

- TokenTactics – A modular toolkit for interacting with and abusing Azure access/refresh tokens. Perfect for token acquisition, manipulation, and reuse.

- GraphSpy – Automates post-exploitation enumeration of Azure AD using valid access tokens, mapping out user privileges and group memberships.

Key Attack Vectors in Azure AD

Attackers now have multiple avenues to acquire and exploit tokens:

1. Device Code Phishing

The device code flow is designed for headless or input-constrained devices but has become a phishing vector. The attacker starts the flow themselves, then tricks the user into entering the resulting, benign-looking code at https://microsoft.com/devicelogin and signing in. Once the user completes the sign-in, the attacker’s polling client receives an access token and a refresh token for the victim’s account.
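On the wire, the polling side of this is just the standard OAuth 2.0 device authorization grant (RFC 8628) against the Microsoft identity platform v2.0 token endpoint. The Python sketch below is a rough illustration under that assumption: the helper names, tenant and client ID are placeholders of mine, and the code only builds the polling request and classifies a response body. It makes no network calls.

```python
DEVICE_CODE_GRANT = "urn:ietf:params:oauth:grant-type:device_code"


def build_poll_request(tenant, client_id, device_code):
    """Return (url, form fields) for one poll of the token endpoint."""
    url = f"https://login.microsoftonline.com/{tenant}/oauth2/v2.0/token"
    form = {
        "grant_type": DEVICE_CODE_GRANT,
        "client_id": client_id,      # placeholder; whichever app started the flow
        "device_code": device_code,  # returned when the flow was started
    }
    return url, form


def classify_poll_response(body):
    """Map a decoded JSON response body to 'issued', 'pending' or 'failed'."""
    if "access_token" in body:
        return "issued"   # the user finished signing in; tokens are in the body
    if body.get("error") in ("authorization_pending", "slow_down"):
        return "pending"  # the user has not completed the sign-in yet
    return "failed"       # declined, expired, or otherwise rejected
```

The point worth noticing is that the user’s password and MFA never reach the polling client. The sign-in happens on Microsoft’s own page, and the tokens are simply handed to whoever holds the `device_code`.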

2. FOCI Tokens and Token Reuse

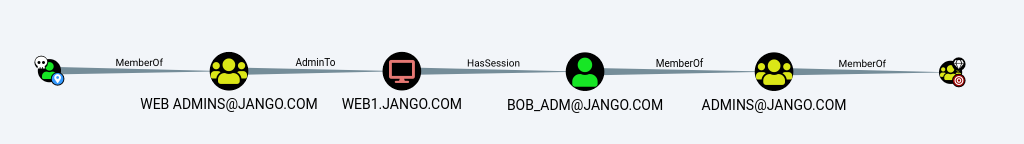

FOCI (Family of Client IDs) tokens are refresh tokens shared across multiple first-party Microsoft apps. If you obtain one, you can request access tokens for other services (e.g., from Teams to Graph to Azure Resource Manager), enabling lateral movement across cloud services.
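Mechanically, that pivot is an ordinary OAuth 2.0 refresh token grant with a different scope. The Python sketch below builds such a request for the v2.0 token endpoint. The function name, tenant, client ID and token value are placeholders of mine rather than anything taken from a real tool, and the code deliberately stops short of sending the request.

```python
GRAPH_SCOPE = "https://graph.microsoft.com/.default"
ARM_SCOPE = "https://management.azure.com/.default"


def build_refresh_request(tenant, client_id, refresh_token, scope):
    """Return (url, form fields) for a refresh_token grant."""
    url = f"https://login.microsoftonline.com/{tenant}/oauth2/v2.0/token"
    form = {
        "grant_type": "refresh_token",
        "client_id": client_id,          # placeholder; a client in the same family
        "refresh_token": refresh_token,  # the stolen or captured refresh token
        "scope": scope,                  # the resource an access token is wanted for
    }
    return url, form


if __name__ == "__main__":
    # Same refresh token, two different target resources.
    for scope in (GRAPH_SCOPE, ARM_SCOPE):
        url, form = build_refresh_request(
            "contoso.onmicrosoft.com",                # hypothetical tenant
            "00000000-0000-0000-0000-000000000000",  # hypothetical client ID
            "<refresh-token>",
            scope,
        )
        print(url, form["scope"])
```

Only `scope` (and, for family tokens, `client_id`) changes between requests, so one refresh token can fan out into several access tokens.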

3. Token Replay for Lateral Movement

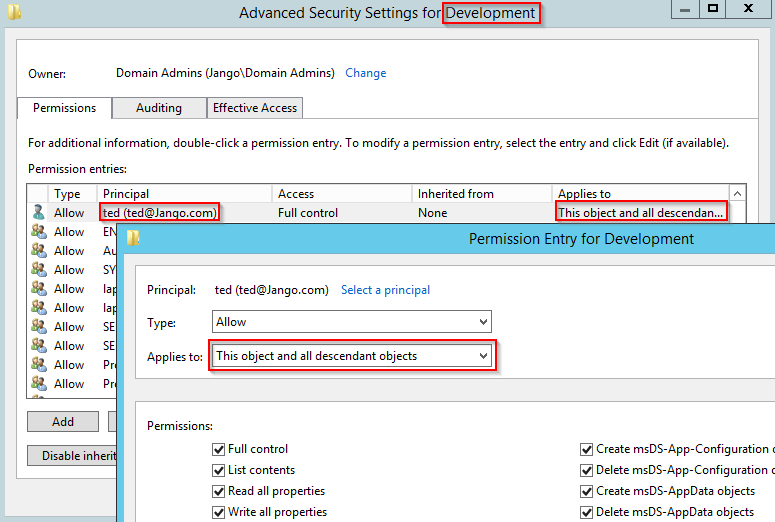

Where on-prem attackers relay NTLM authentication between hosts, attackers in Azure environments can replay access tokens against the APIs they were issued for, or exfiltrate refresh tokens and use them to obtain access tokens for other services. This can be combined with enumeration techniques to identify other service principals and potentially high-privilege accounts with broader access.

4. Misconfigured Conditional Access Policies

Some Conditional Access setups allow token replay from unmanaged or untrusted devices, bypassing traditional device compliance controls. Attackers can replay tokens from their own systems without triggering alerts or prompts.

The Next NTLM?

As defenders harden NTLM usage and shift identity to Azure AD, attackers are following close behind. The abuse of Azure access and refresh tokens is quickly becoming the new frontier of identity-based attacks. We’re already seeing early signs of this trend in red team operations, with tokens increasingly providing the foothold or pivot needed to escalate access within cloud environments.

Final Thoughts

Token-based attacks aren’t theoretical anymore—they’re practical, real-world techniques that work right now in many Azure AD environments. Like NTLM before them, access and refresh tokens are becoming a focal point for both offensive security research and defensive detection engineering.

Expect to see this landscape mature rapidly over the coming months and years. And if your detection stack is still focused on failed logins and brute-force attempts, it’s time to start watching your token grants, consent flows, and refresh token usage instead.
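As a starting point for that kind of monitoring, here is a minimal Python sketch that flags sessions seen from more than one IP address. The event shape (`user`, `session_id`, `ip`) is hypothetical, so real sign-in logs would need mapping onto it first, and the function name is my own. Multiple IPs can be perfectly legitimate (VPNs, mobile networks), so treat a hit as a lead rather than a verdict.

```python
from collections import defaultdict


def sessions_with_multiple_ips(events):
    """Return {(user, session_id): sorted IPs} for sessions seen from >1 IP.

    Each event is a dict with the hypothetical keys 'user', 'session_id', 'ip'.
    """
    ips_by_session = defaultdict(set)
    for event in events:
        key = (event["user"], event["session_id"])
        ips_by_session[key].add(event["ip"])
    return {
        key: sorted(ips)
        for key, ips in ips_by_session.items()
        if len(ips) > 1
    }


if __name__ == "__main__":
    sample = [
        {"user": "alice", "session_id": "s1", "ip": "203.0.113.10"},
        {"user": "alice", "session_id": "s1", "ip": "198.51.100.7"},
        {"user": "bob", "session_id": "s2", "ip": "203.0.113.20"},
    ]
    print(sessions_with_multiple_ips(sample))
```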